There are innumerable ways in which machine learning and big data are starting to impact the healthcare industry. Artificial Intelligence (AI) can ease clinician workload by aiding decision-making. Examples include predicting which patients are most at risk of readmission, detecting anomalies such as tumors in imaging data, and predicting heart conditions from smartwatch data.

- Machine learning (ML) algorithms are not explicitly programmed with rules. Instead, they use a large training dataset to learn their own rules for making predictions. Linear regression is an example of a basic ML algorithm.
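To make the idea concrete, here is a minimal Python sketch of linear regression fitted by ordinary least squares. The toy data are hypothetical; the point is that the slope and intercept (the "rules") are learned from the examples rather than written by a programmer.

```python
def fit_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The OLS slope is the covariance of x and y divided by the variance of x.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Apply the learned rule to a new input."""
    return slope * x + intercept


# Hypothetical training data that happen to follow y = 2x + 1 exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_linear_regression(xs, ys)
print(slope, intercept)  # 2.0 1.0
print(predict(slope, intercept, 5.0))  # 11.0
```

Real clinical models use many more inputs and more flexible algorithms, but the principle of learning parameters from training data is the same.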

In this post, we discuss how machine learning can help clinicians select which patients to put on telehealth and determine the day-to-day interventions for those telehealth patients.

Two recent papers have shown that machine learning can help clinicians using telehealth to:

- Select patients for telehealth by automatically applying an algorithm to EMR data, which can save an estimated $30k per 100 patients by reducing readmissions.

- Replace conventional risk alerts with an algorithm, which can cut combined nursing and readmission costs by about 40% while also reducing readmissions.

Setting up your workflow to allow for a risk analysis before choosing which patients to put on telehealth can impact outcomes and cost. At HRS, we are working on enhancing our risk alert system to help the clinicians using the HRS system choose which patients to focus on.

Using Predicted Risk to Select Patients for a Telehealth Program

Researchers from Partners Healthcare, Massachusetts General Hospital, and Harvard Medical School (Golas et al., 2018) performed a retrospective analysis to estimate the cost savings of using machine learning to select patients for telehealth. They used structured clinical data and data from multiple EMR systems to gather demographic, clinical, and outcomes data on 11,000 patients discharged with heart failure between 2014 and 2015; together, these patients had about 6,000 30-day readmissions.

In this study, the researchers extracted demographics, admissions, diagnoses, labs, medications, procedures, and notes from the EMRs to build a highly comprehensive risk model. Much of this EMR data is “unstructured”: rather than being organized into pre-defined columns, it is often just free-form text. For example, physician notes or discharge summaries might contain sentences about the patient’s history of smoking or the patient’s family network. Text processing converts this information into numerical values that a machine learning algorithm can use.
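As a rough illustration of how free text can become numbers, the Python sketch below uses a simple bag-of-words count. This is an assumed stand-in chosen for clarity, not the text-processing method Golas et al. used, and the two notes are made up.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the note and keep only alphabetic word tokens.
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(notes: list[str]) -> list[str]:
    # Sorted list of every distinct token seen across all notes.
    return sorted({token for note in notes for token in tokenize(note)})


def vectorize(note: str, vocabulary: list[str]) -> list[int]:
    # One count per vocabulary word: how often it appears in this note.
    counts = Counter(tokenize(note))
    return [counts[word] for word in vocabulary]


# Hypothetical physician notes.
notes = [
    "Patient has a history of smoking.",
    "Lives with family; family helps with medications.",
]
vocab = build_vocabulary(notes)
vectors = [vectorize(note, vocab) for note in notes]
for row in vectors:
    print(row)
```

Each note becomes a fixed-length row of counts, so it can sit alongside structured columns such as labs or age in a model's input.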

Taking into account the cost of each readmission, the “response rate” to telehealth (the percentage of readmissions that telehealth typically prevents), and the per-patient cost of telehealth, the researchers calculated the total savings implied by the predictions of various machine learning algorithms. This let them weigh how false positives (patients enrolled in telehealth who would not have been readmitted) and false negatives (missed patients who go on to be readmitted) affect telehealth and readmission costs, and choose a decision threshold that balances the two error rates to minimize total cost. The more sensitive an algorithm is, the more patients it selects for telehealth, and the more readmissions it can potentially prevent, at the price of more telehealth enrollments.
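One plausible way to write this trade-off, assuming prevented readmissions come only from correctly flagged patients and every flagged patient incurs the telehealth cost (the notation is ours, not the paper’s):

```latex
S(\tau) = r \, C_{\mathrm{readmit}} \, \mathrm{TP}(\tau)
        - C_{\mathrm{tele}} \, \big(\mathrm{TP}(\tau) + \mathrm{FP}(\tau)\big),
\qquad
\tau^{*} = \arg\max_{\tau} S(\tau)
```

Here τ is the decision threshold, TP(τ) and FP(τ) are the numbers of true and false positives at that threshold, r is the response rate, and C_readmit and C_tele are the per-patient readmission and telehealth costs. Lowering τ raises TP(τ), which adds savings, but also raises FP(τ), which adds telehealth cost.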

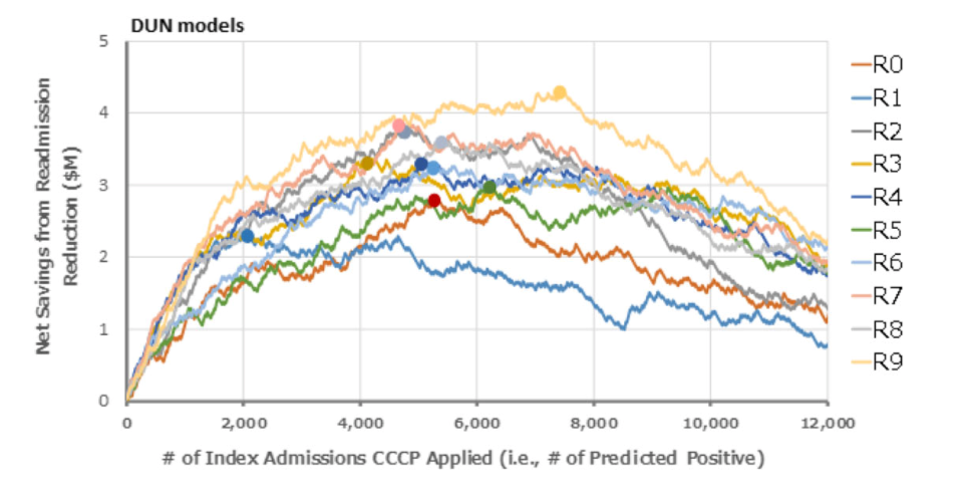

As seen in Fig. 1, as the number of patients selected for telehealth increases, the net savings (in millions) also increase until about 7k patients, after which the cost of enrolling additional patients in telehealth outweighs the savings from the readmissions they prevent. By taking into account both the cost of a readmission and the cost of telehealth, the algorithm can select the number of highest-need patients that minimizes the combined cost, without putting so many patients on telehealth that the telehealth cost becomes too high.
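The rise-then-fall shape can be reproduced with a small Python sketch that ranks patients by predicted risk, enrolls them one at a time, and tracks net savings. It assumes savings come only from readmissions prevented among truly at-risk patients and that every enrolled patient costs the same for telehealth. The cohort, response rate, and costs are hypothetical, and `best_enrollment_size` is an illustrative helper, not code from the study.

```python
def net_savings(tp: int, fp: int, response_rate: float,
                readmit_cost: float, tele_cost: float) -> float:
    # Savings come only from true positives whose readmission telehealth
    # prevents; every enrolled patient (true or false positive) costs tele_cost.
    return response_rate * tp * readmit_cost - (tp + fp) * tele_cost


def best_enrollment_size(risk_scores: list[float], readmitted: list[bool],
                         response_rate: float, readmit_cost: float,
                         tele_cost: float) -> tuple[int, float]:
    # Rank patients from highest to lowest predicted risk.
    order = sorted(range(len(risk_scores)),
                   key=lambda i: risk_scores[i], reverse=True)
    best_k, best_savings = 0, 0.0
    tp = fp = 0
    for k, i in enumerate(order, start=1):
        # Enroll the k-th highest-risk patient and update the counts.
        if readmitted[i]:
            tp += 1
        else:
            fp += 1
        savings = net_savings(tp, fp, response_rate, readmit_cost, tele_cost)
        if savings > best_savings:
            best_k, best_savings = k, savings
    return best_k, best_savings


# Hypothetical cohort: predicted risks and whether each patient was readmitted.
risk_scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
readmitted = [True, True, False, True, False, False]
k, savings = best_enrollment_size(risk_scores, readmitted, response_rate=0.5,
                                  readmit_cost=10_000, tele_cost=1_500)
print(k, savings)  # 4 9000.0
```

Enrolling the fifth and sixth patients lowers net savings here because neither was going to be readmitted, so each adds telehealth cost with no offsetting benefit.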

Figure 1: Researchers from Partners Healthcare, Mass. General Hospital, and Harvard Medical School calculated the projected net savings from readmission reduction. This figure shows the savings for 10 different subsets of their dataset using a DUN model, a type of deep neural network, across different thresholds for predicting “positive” for readmission and therefore applying telehealth (CCCP).

In their analysis, the researchers reached 76% accuracy in predicting 30-day readmissions, with maximum projected savings of $3.4 million for those 11,000 patients over the course of one year. That works out to about $309 per patient ($3.4 million ÷ 11,000 patients), or roughly $30k saved per 100 patients through machine learning.

As a healthcare provider, using evidence-backed methods like risk scores, health confidence tools, or an EMR-based algorithm at the start of care can help clinical staff be more precise when choosing which patients have the highest need for telemonitoring. In the near future, HRS will be adding a health confidence survey to the platform that can be used at the start of care.

Using Machine Learning for Daily Alerts to Enhance Conventional Risk Alert Systems

Selecting patients for telehealth based on analytics can improve patient outcomes and reduce cost. Once the patient is enrolled in a telehealth program, machine learning can further help clinicians make decisions in day-to-day treatment.

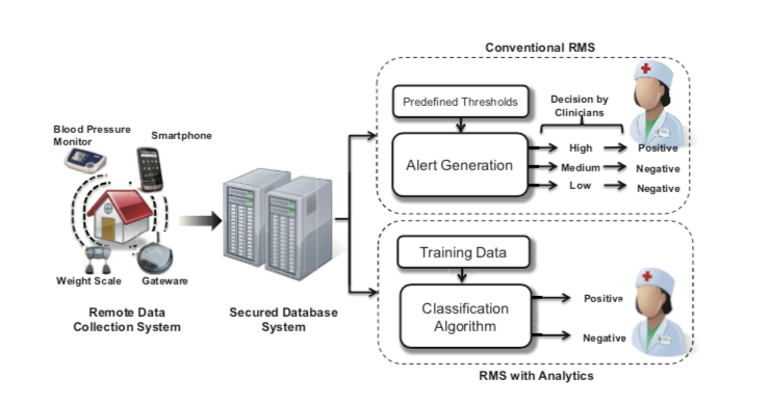

Researchers from UCLA (Lee et al., 2013) compared the costs of a conventional risk alert system with those of a machine learning algorithm that generates risk alerts, for patients already enrolled in telehealth. A conventional risk alert system (Fig. 2) uses pre-defined thresholds to generate alerts from incoming biometrics such as blood pressure, heart rate, and weight, and from survey answers submitted through a tablet or smartphone.

At HRS, this is how our risk alert system operates. The clinician using the HRS solution can adjust thresholds for each metric, so that if a metric is recorded outside of a patient’s range, the clinician is automatically alerted.
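A conventional per-metric threshold check like the one described above can be sketched in a few lines of Python. The metric names and ranges are hypothetical, and this is an illustration of the general idea, not HRS’s actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Range:
    low: float
    high: float


def threshold_alerts(reading: dict[str, float],
                     ranges: dict[str, Range]) -> list[str]:
    # Return the name of every metric recorded outside its clinician-set range.
    alerts = []
    for metric, value in reading.items():
        allowed = ranges.get(metric)
        if allowed is not None and not (allowed.low <= value <= allowed.high):
            alerts.append(metric)
    return alerts


# Hypothetical per-patient ranges a clinician might configure.
ranges = {
    "systolic_bp": Range(90.0, 140.0),
    "heart_rate": Range(50.0, 100.0),
    "weight_lb": Range(150.0, 165.0),
}
reading = {"systolic_bp": 152.0, "heart_rate": 88.0, "weight_lb": 166.5}
print(threshold_alerts(reading, ranges))  # ['systolic_bp', 'weight_lb']
```

Each metric is judged on its own, one reading at a time, which is exactly the limitation the machine learning approach tries to address.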

Figure 2: In Lee et al. (2013), researchers used data from a remote monitoring system that included a blood pressure monitor, scale, and smartphone to compare a conventional risk alert system with a machine learning algorithm alert system.

While the conventional system is a great example of how technology and easy data access can help relieve clinician workload, applying machine learning can ease that workload even further. Under the conventional model, the clinician chooses pre-defined thresholds for each patient and then monitors a separate risk alert for each biometric reading as it arrives. In a machine learning based risk alert system, the model combines demographic information with all of the patient’s biometric readings, compared against that patient’s own averages, to provide one prediction on which the clinician can base a decision.

Lee et al.’s algorithm takes into account the risk alerts sent for each patient over the last few days, as well as features such as how a patient’s weight gain compares with that patient’s average weight and with the maximum weight gain in the last week. This allows the model to be more accurate than the conventional binary threshold system, because it gives the clinician a prediction based on the bigger picture. Letting the clinician consider one final risk number, rather than a threshold for each metric, frees the clinician’s time and eases their workload.
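The Python sketch below shows how features like these might be rolled into one number. The feature definitions, the logistic model, and the coefficients are all hypothetical stand-ins chosen for illustration; Lee et al.’s actual model and features may differ.

```python
import math


def alert_features(weights_lb: list[float],
                   recent_alerts: list[int]) -> dict[str, float]:
    # weights_lb: daily weights, oldest first, with today's weight last.
    # recent_alerts: number of threshold alerts on each of the last few days.
    today = weights_lb[-1]
    average = sum(weights_lb) / len(weights_lb)
    last_week = weights_lb[-7:]
    # Largest day-over-day weight gain within the last seven readings.
    max_gain = max((b - a for a, b in zip(last_week, last_week[1:])), default=0.0)
    return {
        "weight_vs_average": today - average,
        "max_daily_gain_last_week": max_gain,
        "recent_alert_count": float(sum(recent_alerts)),
    }


def risk_score(features: dict[str, float], coefficients: dict[str, float],
               bias: float) -> float:
    # Logistic model: a weighted sum of the features squashed into (0, 1).
    z = bias + sum(coefficients[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, hand-picked coefficients; a real model would learn these.
COEFFICIENTS = {
    "weight_vs_average": 0.4,
    "max_daily_gain_last_week": 0.8,
    "recent_alert_count": 0.5,
}
BIAS = -3.0

rising = alert_features([180.0, 180.5, 181.0, 180.8, 182.0, 183.5, 184.0], [0, 1, 2])
stable = alert_features([180.0] * 7, [0, 0, 0])
print(risk_score(rising, COEFFICIENTS, BIAS), risk_score(stable, COEFFICIENTS, BIAS))
```

The clinician sees one probability per patient instead of a separate alert for each metric, and a patient with steadily rising weight and recent alerts scores higher than a stable one.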

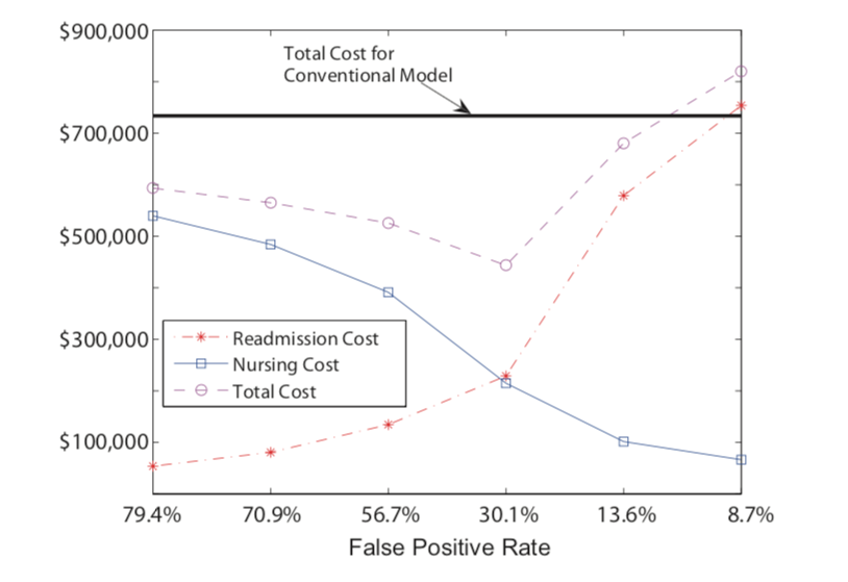

Figure 3: In Lee et al. (2013), researchers estimated a nursing cost and a readmission cost for each false positive and false negative prediction. The conventional system has a fixed cost because it is based on the binary threshold model. With the ML algorithm, however, as the false positive rate decreases, the total nursing cost goes down but readmission costs increase. The ideal false positive rate here is 30.1%, where overall cost is minimized.

Much like Golas et al., Lee et al. used an algorithm that minimized cost by considering the cost of both a false negative (an at-risk patient is missed and may be readmitted) and a false positive (which adds nursing cost). They calculated an average cost per nurse intervention from a nurse’s annual salary, the total number of nurses involved in the study, and the total number of alerts the nurses received. As Fig. 3 shows, the combined cost of nursing time and readmissions was lower with the machine learning algorithm than with the conventional model for most false positive rate thresholds. At the minimum cost, machine learning reduced the overall cost by about 40%, from approximately $734k to $444k. The improved true positive alert rate allows the clinician to focus on the most at-risk patients, saving clinician time while also reducing readmissions.
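The cost bookkeeping described above can be sketched in Python. Spreading total nursing payroll evenly over all alerts is our assumption about how the per-intervention cost was computed, as is treating every raised alert as one intervention and every missed at-risk patient as one readmission. The salary, staffing, and alert counts are hypothetical; only the $734k and $444k figures come from the study as reported here.

```python
def cost_per_alert(annual_salary: float, num_nurses: int, total_alerts: int) -> float:
    # Assumption: total nursing payroll is spread evenly over every alert handled.
    return annual_salary * num_nurses / total_alerts


def total_cost(alerts_raised: int, missed_readmissions: int,
               per_alert_cost: float, readmission_cost: float) -> float:
    # Each alert (true or false positive) costs one nurse intervention;
    # each missed at-risk patient (false negative) costs one readmission.
    return alerts_raised * per_alert_cost + missed_readmissions * readmission_cost


def percent_reduction(before: float, after: float) -> float:
    return 100.0 * (before - after) / before


# Hypothetical inputs, chosen only to show the arithmetic.
per_alert = cost_per_alert(annual_salary=70_000, num_nurses=2, total_alerts=1_400)
print(per_alert)  # 100.0
print(total_cost(300, 5, per_alert, 10_000))  # 80000.0

# Cost figures reported in the text: about $734k (conventional) vs. $444k (ML).
print(round(percent_reduction(734_000, 444_000), 1))  # 39.5
```

Sweeping the alert threshold changes `alerts_raised` and `missed_readmissions` in opposite directions, which is what produces the minimum-cost point in Fig. 3.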

Artificial intelligence and machine learning affect almost every industry and are beginning to drastically change the way healthcare systems operate. These two projects are retrospective studies that provide strong evidence that this kind of risk analysis can improve patient outcomes and lower cost. Moving from studies like these to real-world implementation requires frequent data connectivity, which HRS can provide. The HRS Data Science team is testing algorithms similar to those in these studies for implementation in our own system.

References:

Golas, S.B., Shibahara, T., Agboola, S. et al. A machine learning model to predict the risk of 30-day readmissions in patients with heart failure: a retrospective analysis of electronic medical records data. BMC Med Inform Decis Mak 18, 44 (2018) doi:10.1186/s12911-018-0620-z (https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-018-0620-z)

Lee, S.I., Ghasemzadeh, H., Mortazavi, B. et al. Remote patient monitoring: what impact can data analytics have on cost? In Proceedings of the 4th Conference on Wireless Health (WH '13), Article 4, 8 pages. ACM, New York, NY, USA (2013) doi:10.1145/2534088.2534108 (https://dl.acm.org/doi/10.1145/2534088.2534108)